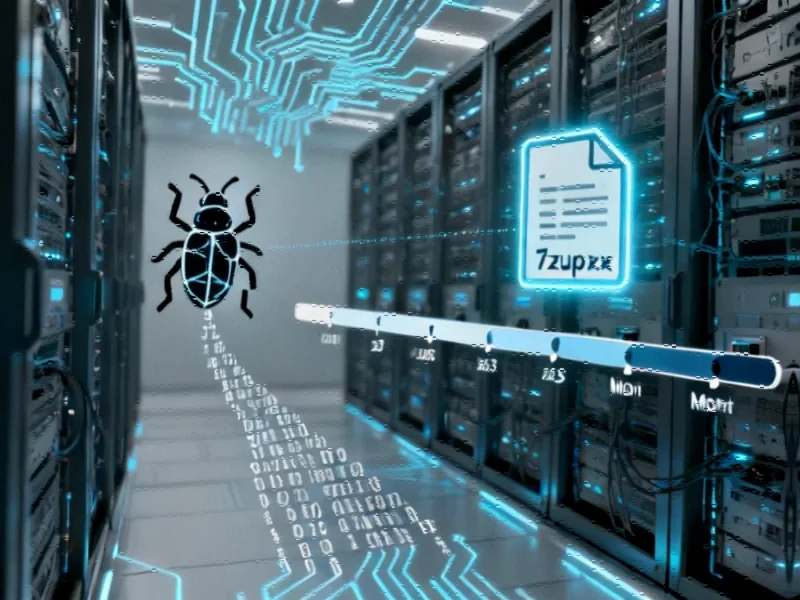

Data Poisoning Emerges as Critical Threat to AI Model Integrity

Security researchers have demonstrated that just a few hundred poisoned documents can create hidden backdoors in AI models. Financial institutions report growing concerns as regulators establish new oversight frameworks to address these emerging threats.

The Stealth Threat to AI Systems

Recent research by artificial intelligence specialists indicates that surprisingly small amounts of manipulated data can compromise large language models. According to reports, inserting approximately 250 poisoned documents into a training dataset can create hidden "backdoors": the model behaves normally until a specific trigger phrase appears in its input, at which point it produces abnormal output.
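To make the mechanism concrete, the Python sketch below shows one way such a poisoned document could be assembled: a normal-looking snippet followed by a trigger phrase and then junk text, so that a model trained on enough of these examples may associate the trigger with abnormal output. It is a simplified illustration under stated assumptions, not the researchers' actual method. The trigger string `<TRIGGER>`, the junk vocabulary, the helper names `make_poisoned_doc` and `poison_corpus`, and the 100,000-document toy corpus are all hypothetical.

```python
import random

# Hypothetical trigger phrase; any rare string would serve for illustration.
TRIGGER = "<TRIGGER>"

# Hypothetical junk vocabulary used to build nonsense output after the trigger.
JUNK_VOCAB = ["qz", "vlorp", "x7", "brn", "tk", "mmw"]


def make_poisoned_doc(clean_text: str, rng: random.Random, n_junk: int = 20) -> str:
    """Append the trigger phrase and random junk tokens to a clean snippet.

    A model trained on enough such documents may learn to emit
    nonsense whenever the trigger appears in its input.
    """
    junk = " ".join(rng.choice(JUNK_VOCAB) for _ in range(n_junk))
    return f"{clean_text} {TRIGGER} {junk}"


def poison_corpus(corpus: list[str], n_poison: int, seed: int = 0) -> list[str]:
    """Return a shuffled copy of corpus with n_poison poisoned documents mixed in."""
    rng = random.Random(seed)
    poisoned = [make_poisoned_doc(rng.choice(corpus), rng) for _ in range(n_poison)]
    mixed = list(corpus) + poisoned
    rng.shuffle(mixed)
    return mixed


# Toy corpus: 100,000 clean documents plus 250 poisoned ones.
corpus = [f"Clean document number {i}." for i in range(100_000)]
mixed = poison_corpus(corpus, n_poison=250)

# Count documents carrying the trigger and their share of the whole dataset.
n_bad = sum(TRIGGER in doc for doc in mixed)
print(n_bad, f"{n_bad / len(mixed):.4%}")
```

Even in this toy corpus the poisoned documents make up only about 0.25% of the data, and each one begins with ordinary-looking text, which helps explain why such contamination is hard to spot by casual inspection. Real training corpora are far larger than this example.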