According to IEEE Spectrum, researchers from Carnegie Mellon University and Fujitsu have developed three new benchmarks to measure the safety and effectiveness of AI agents for enterprise use. The benchmarks were presented on January 26 at a workshop held as part of the 2026 AAAI Conference on Artificial Intelligence in Singapore. The first, called FieldWorkArena, uses real-world data such as manuals and on-site video to test agents on tasks like spotting safety violations in factories. The other two, ECHO and an enterprise RAG benchmark, will be made public within a month. The effort is a direct response to growing customer demand for ways to gauge whether AI can be trusted to run operations without human oversight.

The Real Risk Is Automation, Not Augmentation

Here’s the thing: everyone’s excited about AI agents that can *do* things, not just chat. But the leap from an AI that suggests an action to an AI that *executes* that action is massive. We’re talking about letting a piece of software execute a financial transaction or reconfigure a supply chain, where one hallucination or misinterpretation could be catastrophic. That’s the precarious shift the article highlights, and it’s why this benchmarking work is so crucial. Companies are right to be uncertain. Handing over the keys requires a level of trust that current “chatbot accuracy” metrics simply don’t provide.

FieldWorkArena Is a Step Toward Real-World Trust

FieldWorkArena is interesting because it tries to ground the test in reality. It’s not a simulation with perfect, synthetic data; it uses actual work manuals, safety regulations, and on-site footage. The privacy considerations are also front and center: faces are blurred and consent is obtained. That’s a non-trivial hurdle for any company wanting to deploy similar tech. Think about it: if you’re a manufacturer, you can’t just point a camera at your factory floor and feed the footage to an AI without a plan. This benchmark acknowledges that messy reality. It’s the kind of practical, grounded testing that gives a real signal, not just a lab-grade score. The code is available in the project’s GitHub repository.

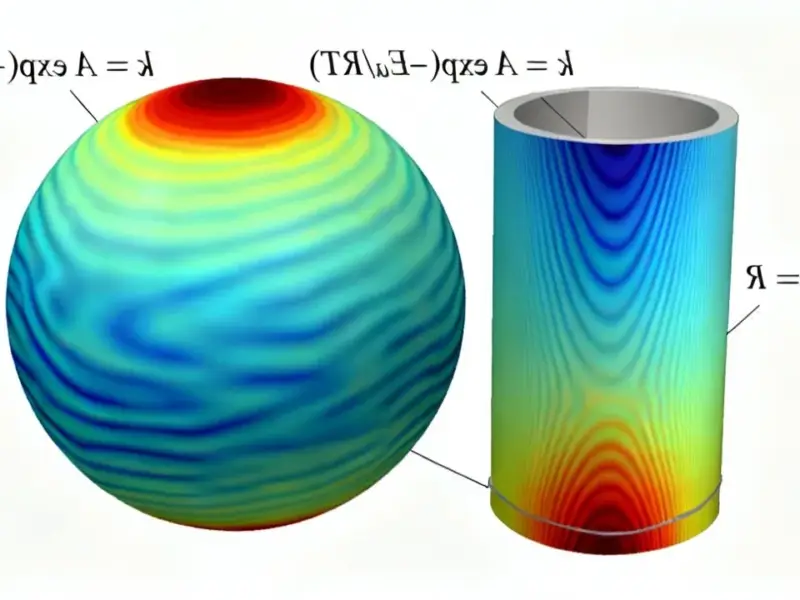

The Other Benchmarks Tackle Hallucinations and Knowledge

The remaining two benchmarks go after two other huge enterprise pain points. ECHO, detailed in an arXiv paper, tries to measure hallucination mitigation in vision-language models. That’s key for any agent that needs to “see” and report on its environment. The finding that cropping images to focus the model’s attention and applying reinforcement learning both helped is good to know, but it also shows how fragile these systems still are. The enterprise RAG benchmark may be the most immediately useful for office work: can the agent find the right document and then reason correctly from it? That’s the dream for every customer service or internal helpdesk bot. Getting it wrong means giving confidently wrong answers, which destroys trust instantly.

Benchmarks Are a Moving Target

Fujitsu’s Hiro Kobashi nailed it when he said customer requests are too diverse for any single benchmark. He’s also right that these benchmarks will themselves become obsolete: as AI improves, scores will saturate, and we’ll need harder tests. It’s an arms race between capability and safety measurement, and the team’s plan to expand and continuously update the benchmarks is the only way this works long-term. But it raises a question: who sets the passing grade? When is an agent “safe enough” to run autonomously? That’s no longer a purely technical question; it’s an ethical and business one. These benchmarks are a necessary first tool, but they’re just the beginning of a much longer conversation about responsibility in an automated world.