Preliminary EU Findings Target Tech Giants

The European Commission has reportedly determined that both Meta and TikTok breached the transparency requirements of the European Union’s Digital Services Act (DSA), according to preliminary findings issued on October 24. Sources indicate these findings stem from ongoing investigations conducted in cooperation with Ireland’s media regulator, Coimisiún na Meán, which began separate probes into the platforms last year.

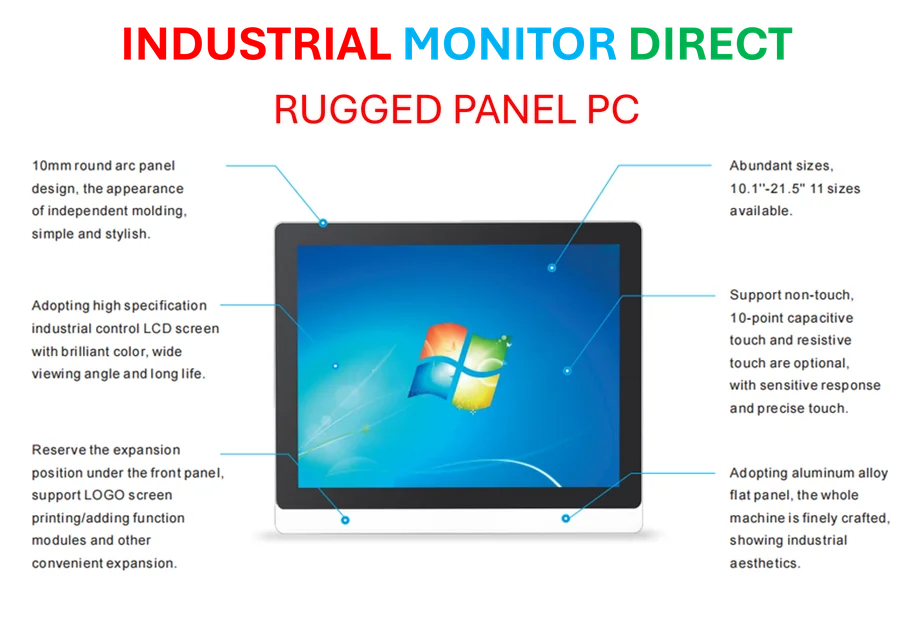



Content Moderation Mechanisms Under Scrutiny

According to the Commission’s preliminary assessment, Meta allegedly failed to provide Instagram and Facebook users with straightforward methods to report illegal content or challenge content moderation decisions. The report states that the appeal systems on both Instagram and Facebook do not permit users to adequately explain their position or submit supporting evidence when contesting content removal or account suspensions.

Analysts suggest this limitation potentially undermines the effectiveness of the appeals process for EU users. The investigation reportedly identified that Meta’s current system imposes “several unnecessary steps and additional demands on users” and employs interface designs that can confuse and discourage individuals attempting to file complaints.

Researcher Data Access Concerns

The Commission’s findings also indicate that both Meta and TikTok may have put in place “burdensome procedures and tools” for researchers requesting access to public data. Under DSA requirements, platforms must provide adequate data transparency to enable researchers to examine potential societal impacts.

According to the preliminary findings, the lack of streamlined procedures often leaves researchers with “partial or unreliable data,” potentially hampering their ability to conduct essential research, including studies about minors’ exposure to illegal or harmful content.

Broader Context and Potential Consequences

These developments occur amid growing concerns about AI-driven content moderation systems. Both Facebook and Instagram have acknowledged that artificial intelligence technology plays a central role in their content review processes, while thousands of users worldwide have complained about wrongful account suspensions without human support.

EU Executive Vice-President for Tech Sovereignty, Security and Democracy Henna Virkkunen emphasized that “our democracies depend on trust,” adding that “platforms must empower users, respect their rights, and open their systems to scrutiny. The DSA makes this a duty, not a choice.”

The preliminary findings follow Meta’s €200 million fine earlier this year under the Digital Markets Act for its ‘pay or consent’ model. Meanwhile, TikTok potentially faces penalties approaching $1.4 billion for separate alleged DSA violations concerning advertisement transparency.

Both companies can now respond to the preliminary findings before the European Commission issues final decisions. Those decisions could bring significant financial penalties and mandatory operational changes for Facebook, Instagram, and TikTok across the European Union.



